How to use the Discngine Pipeline Pilot Data Functions

Data Functions are natively calculations based on S-PLUS, open-source R, SAS®, or MATLAB® scripts, or on R scripts running under TIBCO Enterprise Runtime for R for Spotfire, which you can make available in the TIBCO Spotfire® environment. With the Connector, you can also base Data Functions on Pipeline Pilot protocols.

In this tutorial you will learn how to register and use Pipeline Pilot Data Functions in TIBCO Spotfire®.

Materials

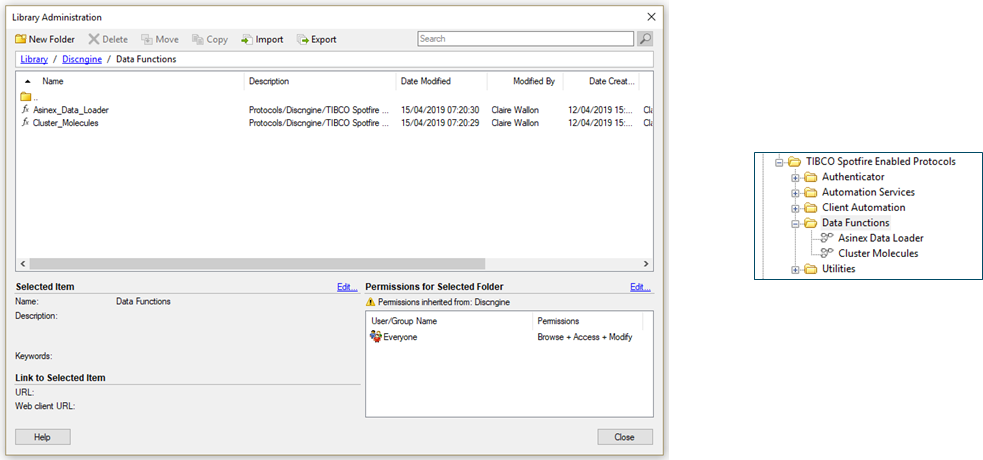

Two Pipeline Pilot Data Functions are provided in the package for this tutorial. They are already saved in the library you imported during installation, and the corresponding Pipeline Pilot protocols are in the collection.

If you have sufficient privileges in TIBCO Spotfire®, you can view these Data Functions in the library administration, and you can view the protocols in Pipeline Pilot:

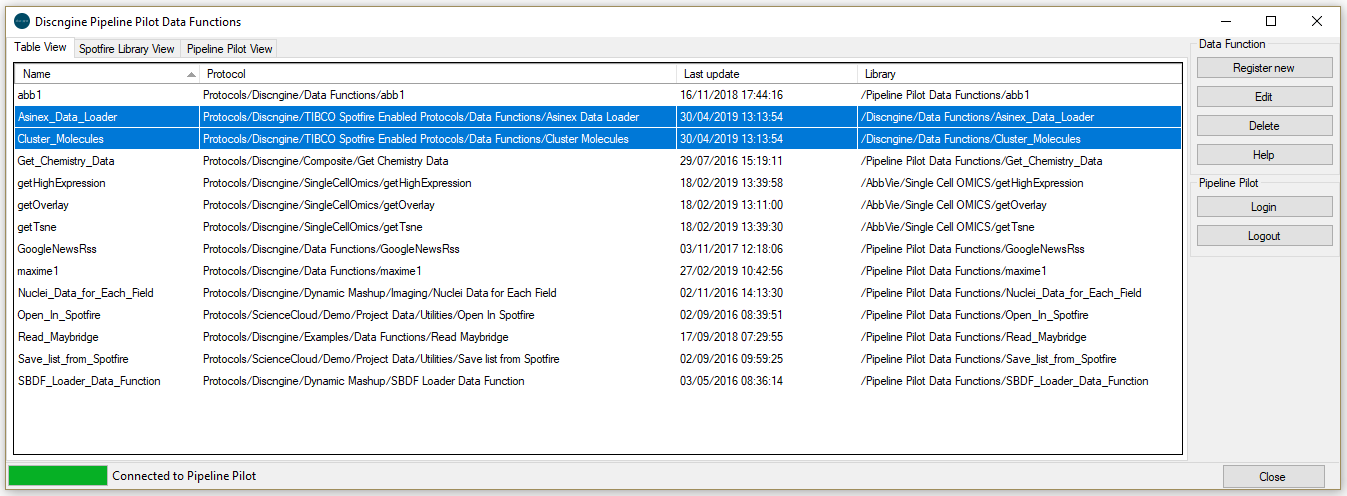

1. Registration of Pipeline Pilot Data Functions

Because the two Data Functions are provided in the Connector package, they are already registered.

To register new ones:

-

Open the Tools > Pipeline Pilot Data Functions...

-

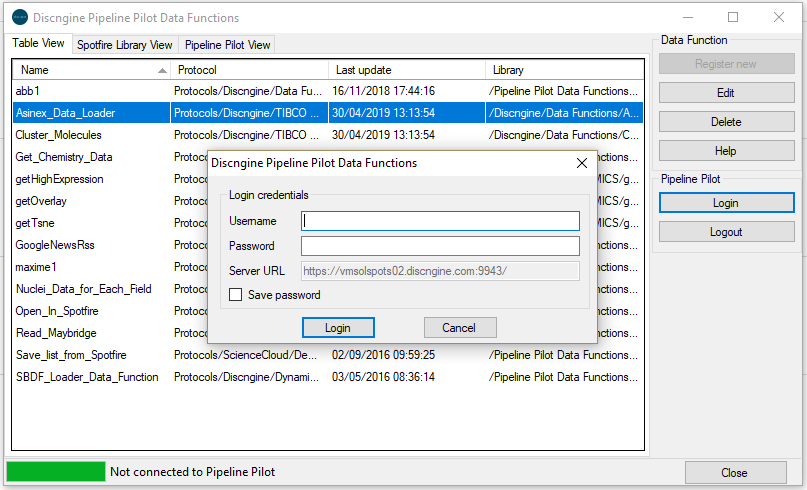

Log in to the Pipeline Pilot server using your Pipeline Pilot credentials:

The server URL is the one set in the Discngine Data Function preferences.

-

Click on "Register new" and browse the Pipeline Pilot hierarchy to select the protocol.

-

A pop-up window opens, displaying information about the Data Function and the protocol. You can also assign a few keywords to the Data Function, so that you can easily search for them later. You can also define whether you want to allow caching for this Data Function. The library path is set automatically, using the value of the Discngine Data Function preference, but you can change it. Finally, the input and output of the protocol are reminded.

2. Run Pipeline Pilot Data Functions

Load data

In TIBCO Spotfire® Analyst:

-

Starting without opened analysis in TIBCO Spotfire®, click on Insert > Data Function > From Library....

-

A window opens, exposing all the available registered Data Functions. Find the "Asinex_Data_Loader" Data Function and click "OK".

-

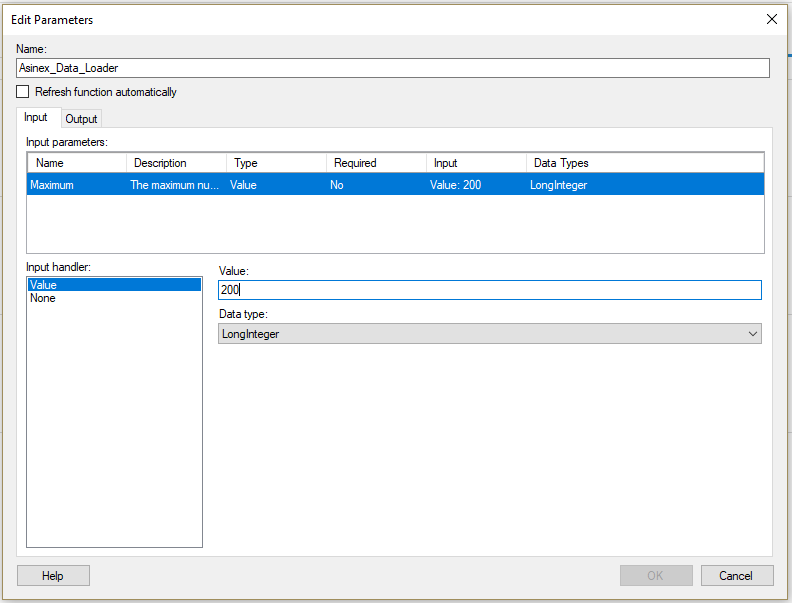

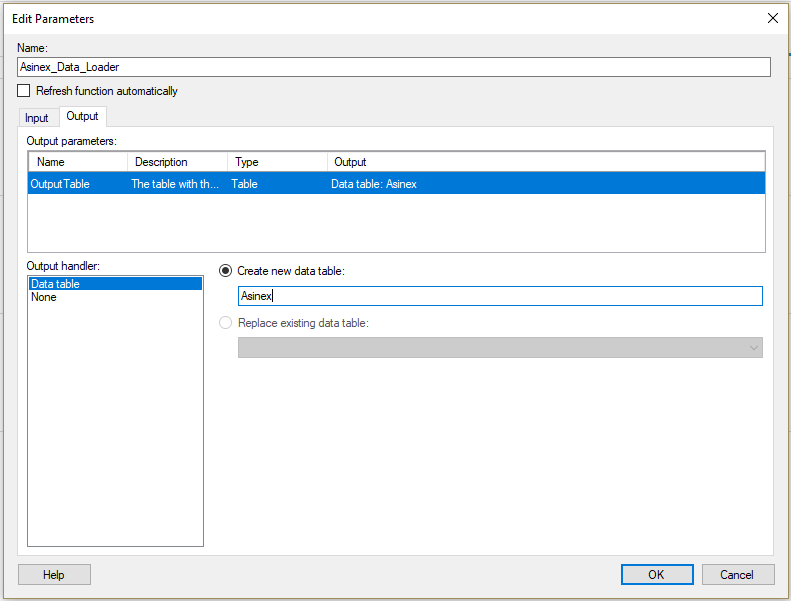

Here you can define the input and output parameters.

-

Set the value 200 for the input parameter "Maximum" so the first 200 records of the Asinex data set will be loaded.

-

In the "Output" tab, set "Asinex" for the name of the data table that will contains the results of the Data Function, that is the first 200 records of Asinex.

-

-

Click "OK".

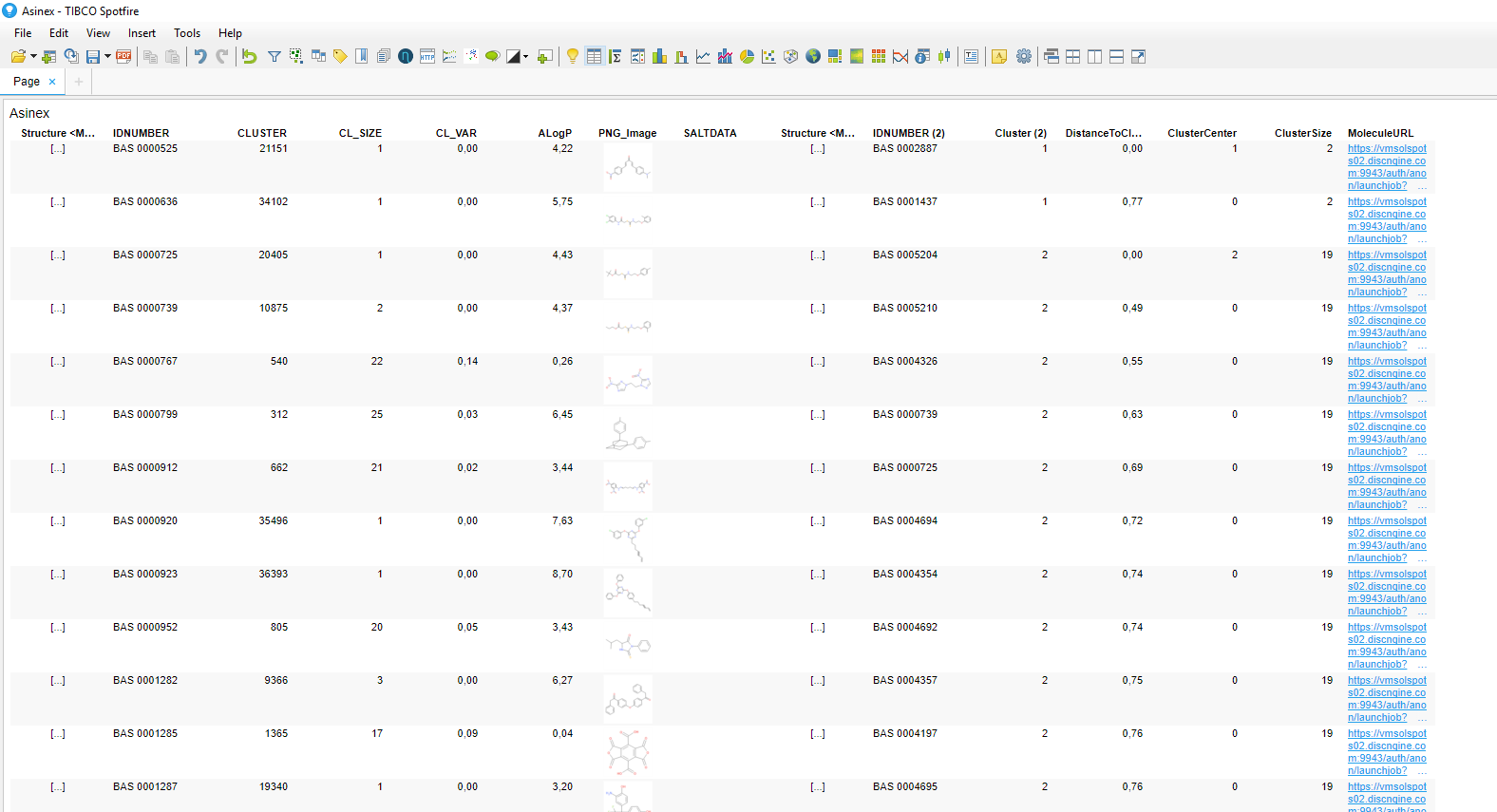

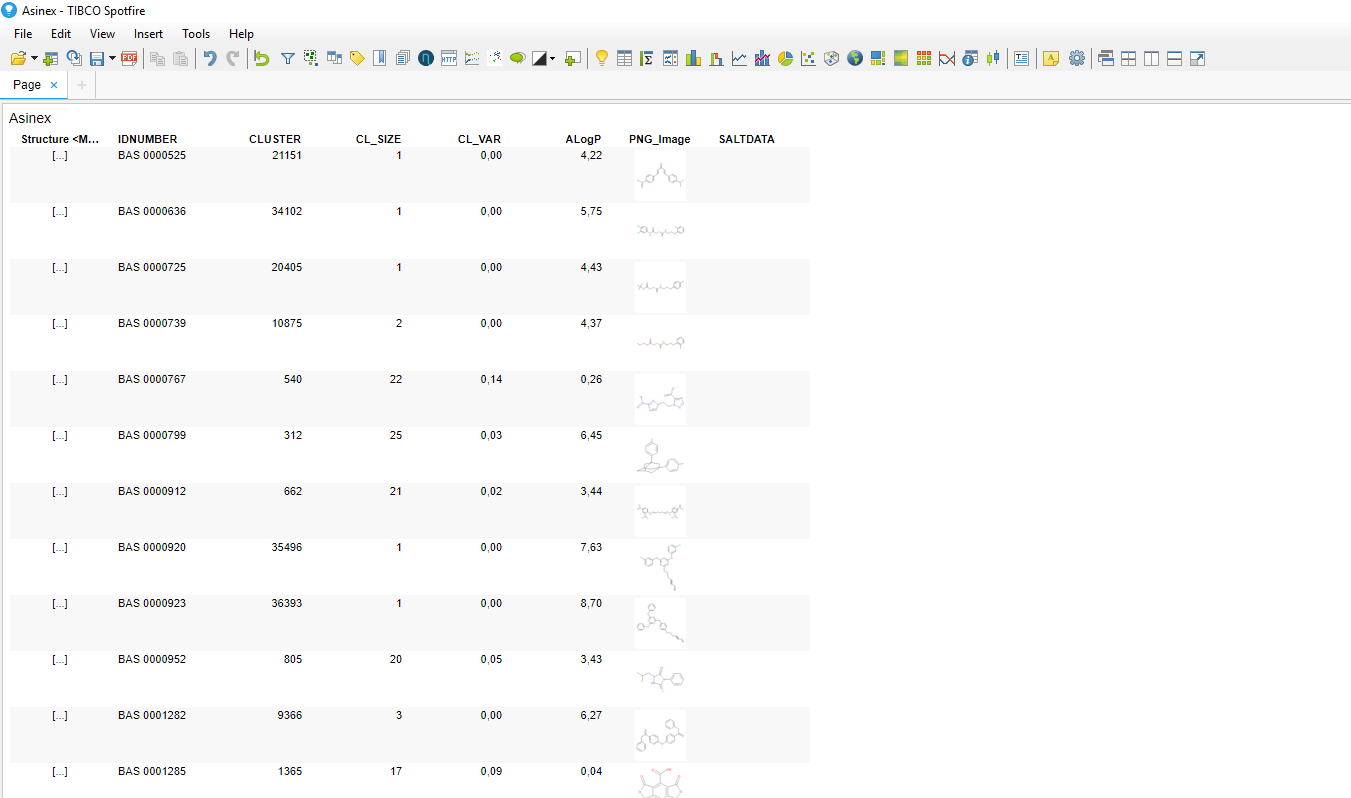

The data are now added to the analysis.

On the Pipeline Pilot side, the protocol "Asinex Data Loader" simply reads the Asinex data set, computes ALogP, generates an image of each molecule in PNG format, and writes the results to an SBDF file. Note that:

- the input parameter "Maximum" is a parameter of the protocol;

- the protocol must write an SBDF file to the job folder. TIBCO Spotfire® then reads this file to load the data into the analysis.

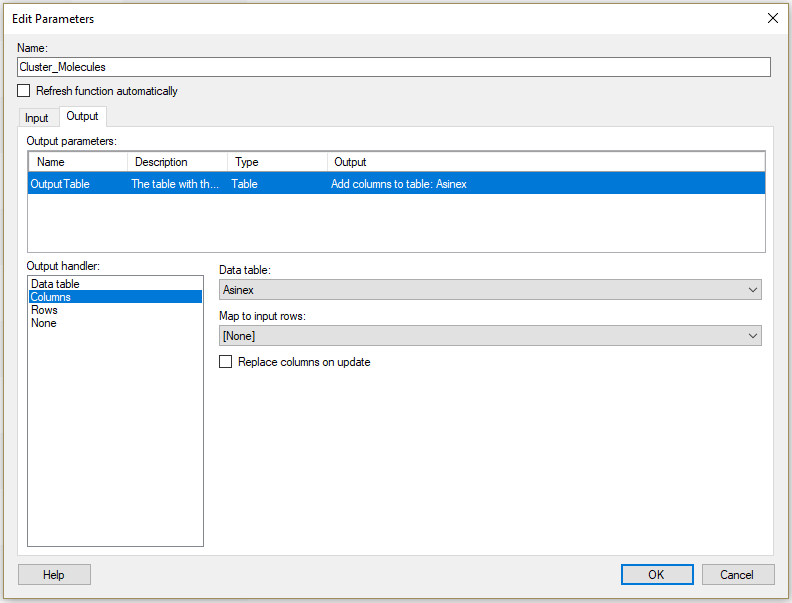

Add data using an existing TIBCO Spotfire® data table

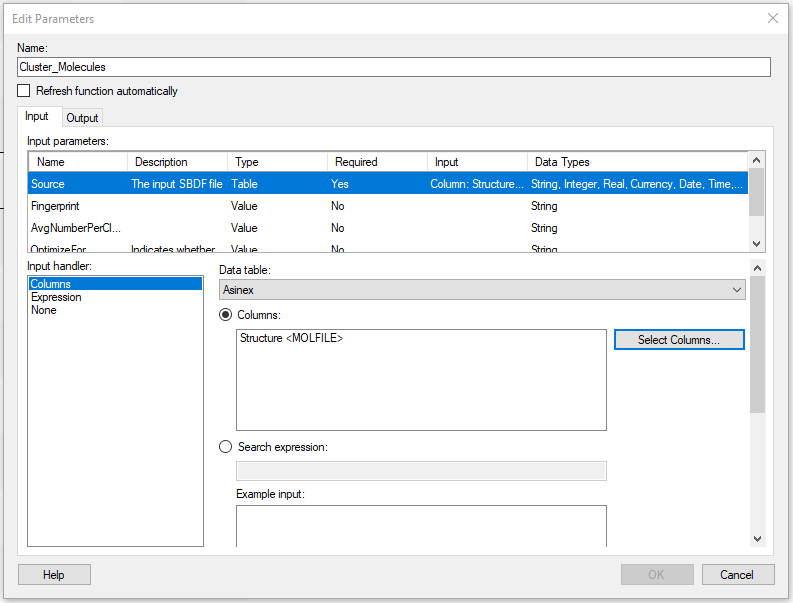

Following the same steps as before, now insert the Pipeline Pilot Data Function "Cluster_Molecules". As you can see in the "Edit Parameters" window or in the corresponding Pipeline Pilot protocol, this Data Function requires a source as a mandatory input parameter. The other input parameters are optional.

Select at least the "Structure" column.

For the output parameter, we will now add the computed data to the existing Asinex data table as new columns: